How “Midstream Bivalence” Leads to Reckless Philosophy

In philosophy, we all love the idea of discovering a new powerful argument, or finding a formidable refutation, or figuring out a beautiful new articulation, or validating our preconceived beliefs. We crave something confident & conclusive. And so any bug that both provides that confidence and slips past even very intelligent people is going to turn up everywhere.

Here’s one of them.

“Midstream bivalence” is a nickname for the following:

- Several premises along a syllogism enjoy large confidence but not total confidence, and

- those premises are treated bivalently (shoehorned into either “true” or “false”), and

- when deciding whether to count those premises as “true” or “false,” we choose to “round up” that large confidence (but not total confidence) to “true.”

Those of us who have done data analysis know that these sorts of “rounding errors,” when buried within a process, are notorious for the bad and/or overconfident conclusions they can yield. But it takes some experience and/or examples to see the issue intuitively.

A syllogistic conclusion is only as secure as the conjunction of all of its premises. Consider some logic within a spy story featuring 3 independent agents:

- A: Larry is on our side.

- B: Julie is on our side.

- C: Kris is on our side.

- D: Anyone on our side will fire their flare when the signal is sent.

- E: Thus, upon sending the signal, all three of Larry, Julie, and Kris will fire their flares.

This can be expressed as A&B&C&D→E.

Let’s say we’re all largely confident in each of A, B, C, and D independently. And so, we “round up” to “true” on each. This gives us T&T&T&T→T. Nice!

But… is this “okay”? It feels kind of sloppy, but it can’t be that bad, right?

Well…

… turns out it’s bad.

There are independent chances, albeit small, that each of Larry, Julie, and Kris might be double agents. Let’s say a 10% chance each.

Furthermore, there’s a chance that an agent may miss the signal for whatever reason (incapacitated, asleep, distracted, etc.). Let’s say that chance is 10%… but note that it’s an independent possibility for each agent.

Now things look more like this:

- A: Larry is on our side. (P=0.9)

- B: Julie is on our side. (P=0.9)

- C: Kris is on our side. (P=0.9)

- D: If Larry is on our side, he will fire his flare when the signal is sent. (P=0.9)

- E: If Julie is on our side, she will fire her flare when the signal is sent. (P=0.9)

- F: If Kris is on our side, she will fire her flare when the signal is sent. (P=0.9)

- G: Thus, upon sending the signal, all three of Larry, Julie, and Kris will fire their flares.

Now, if we “round up” to P=1 on each premise, then our final conclusion is P=1 as well.

But what if we refuse to “round up”? Well, if any single one of the 6 premises turns out false, the conclusion is no longer supported. Because the premises are independent, we account for the growing epistemic space of possible failure by multiplying their probabilities together:

0.9 × 0.9 × 0.9 × 0.9 × 0.9 × 0.9 ≈ 0.53 (?!)

Oh dear.

Now what looked like a sure bet has become a coinflip.

That’s a big difference!

That’s a big… problem.
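For anyone who wants to check the arithmetic, here is a minimal Python sketch of the spy story. It compares the "rounded up" result with the unrounded product, then runs a quick simulation as a sanity check. The names (`premise_confidence`, `simulate`) are made up for illustration, and the simulation assumes that a double agent never fires and that an agent who misses the signal never fires.

```python
import math
import random

# Confidence in each of the six premises (three loyalty premises,
# three "will fire when signaled" premises), as in the example above.
premise_confidence = [0.9] * 6

# Midstream bivalence: round every premise up to 1 before combining.
rounded_result = math.prod(1.0 for _ in premise_confidence)

# No rounding: multiply the confidences, assuming the premises are independent.
exact_result = math.prod(premise_confidence)


def simulate(trials=100_000, p_loyal=0.9, p_sees_signal=0.9, seed=0):
    """Estimate how often all three agents fire their flares.

    Assumption (not from the story itself): an agent fires only if they are
    loyal AND they notice the signal.
    """
    rng = random.Random(seed)
    successes = 0
    for _ in range(trials):
        all_fire = all(
            rng.random() < p_loyal and rng.random() < p_sees_signal
            for _ in range(3)
        )
        successes += all_fire
    return successes / trials


print(rounded_result)          # 1.0
print(round(exact_result, 4))  # 0.5314
print(simulate())              # should land close to the exact result
```

The simulated frequency should hover near the unrounded product, not near the "sure bet" that rounding suggests.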

Check out the quick 5-minute video below for more about the scope of this problem and how it drives some of the most notorious, endless conversations in philosophy:

Bonus

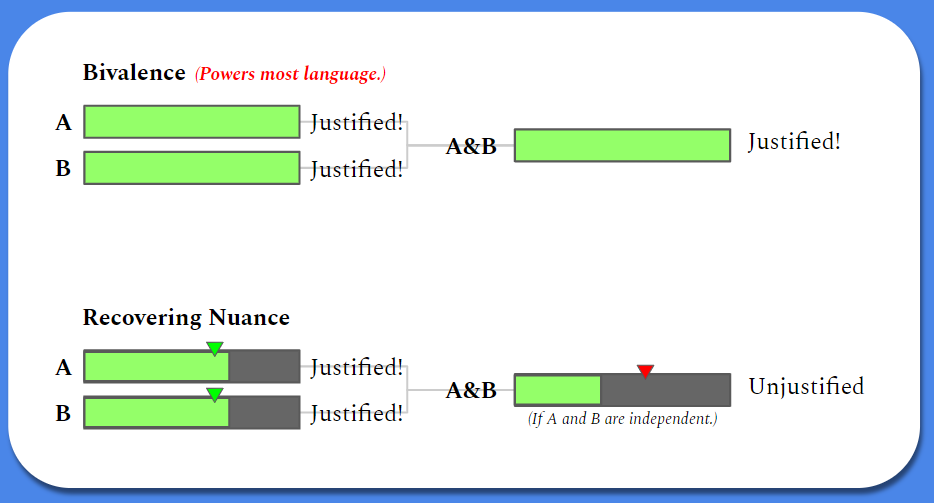

Here’s a diagram illustrating how, even if we fix a confidence threshold for “justification” (so that we can “cash out” in a bivalent way at the end), bivalent “rounding” midstream can make the difference between calling a conclusion justified or unjustified:

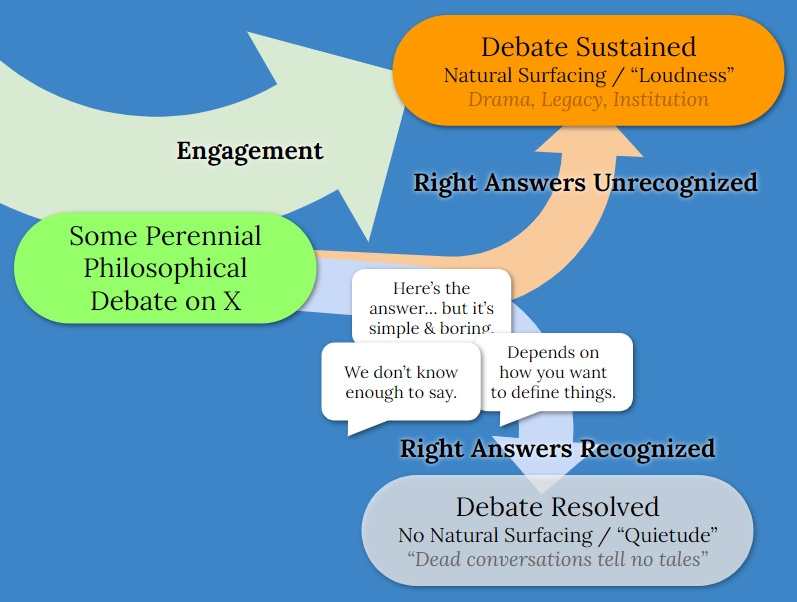
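The same point can be sketched in a few lines of Python, reusing the six 0.9 premises from the spy story. The threshold of 0.8 and the function names are hypothetical, chosen only to illustrate the contrast between rounding each premise midstream and rounding once at the end.

```python
import math


def justified_midstream(confidences, threshold):
    """Round each premise first: the conclusion counts as justified
    as long as every individual premise clears the threshold."""
    return all(c >= threshold for c in confidences)


def justified_at_end(confidences, threshold):
    """Carry the probabilities through (assuming independence) and
    compare only the final conjunction against the threshold."""
    return math.prod(confidences) >= threshold


premises = [0.9] * 6
THRESHOLD = 0.8  # hypothetical cutoff for calling something "justified"

print(justified_midstream(premises, THRESHOLD))  # True
print(justified_at_end(premises, THRESHOLD))     # False
```

Same premises, same threshold, opposite verdicts: the only difference is where the rounding happens.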

And here’s a diagram that illustrates the issue discussed in the back half of the video: selection pressures “reward” bold conclusions and obscure the fact that we already have the answers to most of philosophy’s hottest debates, historical and ongoing. Usually these answers are, “It depends on how you want to define the terms,” and, “We don’t know and probably can’t know.”

These right answers are boring, they can’t be capitalized on, and they end conversations. These three nails in the memetic coffin help explain philosophy’s problem with heuristic capture. In short, those whose careers & names are wholly invested in there being “more to it” cannot be trusted when they say “there’s more to it,” even though they are genuinely honest, intelligent, respectable, and endorsed by their peers (all heuristics we like to lean on).
